Bishop vs Knight: The Eternal Battle

Which piece is better: the knight or the bishop? (A question as old as time itself.) Today you will be looking at the scientific research.

We will be dealing in facts, not opinions.

We don't want somebody's anecdotes about that time they got smother-mated or when they got Greek-gifted.

We want the facts.

History

The traditional piece values are:

| | Pawn | Knight | Bishop | Rook | Queen |
|---|---|---|---|---|---|
| Value | 1 | 3 | 3 | 5 | 9 |

In 1617, chess player, historian and priest Pietro Carrera published Il Gioco degli Scacchi (The Game of Chess). His work was built upon by the Italian Modenese Masters (Ercole del Rio, Giambattista Lolli, and Domenico Lorenzo Ponziani) to deduce the traditional piece values. Lolli discussed this in his book Osservazioni teorico-pratiche sopra il giuoco degli scacchi (Theoretical-practical observations on the game of chess), published in 1763.

But these piece values are not the only ones that were suggested. Many of the historical masters had their own takes. They were in about the same range as the traditional values, but more detailed. François-André Danican Philidor's values, given in 1817, went down to two decimal places:

| Philidor | Pawn | Knight | Bishop | Rook | Queen |
|---|---|---|---|---|---|
| Value | 1 | 3.05 | 3.50 | 5.48 | 9.94 |

Bobby Fischer gave the bishop's value as a quarter of a pawn higher than the knight's (3.25 vs 3).

Garry Kasparov gave the bishop as 3.15 to the knight's 3.

Neural Nets (Gupta et al.)

Let us start with a 2023 study (Gupta et al.) that looked at the advantage the bishop and the knight give by square. In other words: what is the predicted centipawn advantage when a (white/black) (bishop/knight) is on each square? A centipawn is one hundredth of a pawn in engine evaluation, so a +1 advantage is 100 centipawns. They did this with neural nets: the input is (Colour, Piece (B/N), Square) and the output is the centipawn advantage.

They used over 2000 Grandmaster games to train the neural net. A neural net is made of layers. The connections between neurons have a weight and the output of a neuron is also determined by a bias. The weight represents the strength of the connection and the bias is a constant value that is added on to give the output of a neuron. The neural net would then learn through reducing the error of its output.
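To make the weights and biases concrete, here is a minimal sketch of a forward pass through a tiny two-layer net. The layer sizes, the numbers, the ReLU activation and the three-number input encoding are all made up for illustration; they are not the study's actual architecture.

```python
def relu(x):
    """Activation function: pass positive values through, clamp negatives to 0."""
    return max(0.0, x)

def identity(x):
    """No activation: used here for the output neuron."""
    return x

def layer(inputs, weights, biases, activation):
    """Each neuron: weighted sum of the inputs, plus its bias, then the activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

# Hypothetical encoding of (colour, piece, square) as three numbers.
inputs = [1.0, 0.0, 0.5]

# Hypothetical weights and biases (a trained net would have learned these).
hidden = layer(inputs, [[0.2, -0.1, 0.4], [-0.3, 0.5, 0.1]], [0.1, 0.0], relu)
prediction = layer(hidden, [[10.0, 5.0]], [2.0], identity)[0]

print(hidden)      # activations of the two hidden neurons
print(prediction)  # the net's output, standing in for a centipawn advantage
```

Training would nudge those weights and biases until the printed prediction gets close to the evaluation seen in real games.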

Here are the results:

Predicted Centipawn Advantage from Position of White Bishop and Knight.


If you look across the board, the numbers seem to be about the same. However, look at the kingside! The Bishops show a much greater value, probably because kingside bishops can act as lasers, while knights lounging near the base of the board don't do much due to their short range.

The a2 square is surprisingly better for a knight than a bishop. Maybe that's because a bishop going from c4 to a2 wastes a tempo, and the bishop is then locked on the a2-f7 diagonal. If that diagonal doesn't open up, the bishop could be stranded and out of the game.

Another reason a2 is better for a knight could be that if a knight is on a2 in a Grandmaster game, that's probably because it has gone there in the Sicilian Najdorf Variation. In this variation a thematic manoeuvre is Nb3-c1-a2-b4-d5 to control the d5 square.

But that's just one square. Overall, the bishop is showing more advantage than a knight.

You'll also notice that Black's Kingside squares are more advantageous for White than Black's Queenside squares, due to the pressure on the Black King, which is more vulnerable to an attack. The Knight has a little advantage on the g5, h5 and h6 squares. But the Bishop is, surprisingly, a bit better in the center (Knights are usually supposed to be in the center, but I guess if a Bishop can comfortably sit in the center, then something went wrong for Black). Intriguingly, the a file on the Queenside is a hot spot.

Now let's look at the centipawn advantage for the Black Bishop and Black Knight by square.

Predicted Centipawn Advantage from Position of Black Bishop and Knight. Note: we are looking from White's perspective. Left Bottom Corner is the a1 square. Numbers start off negative as Black usually has lower centipawn advantages than White as White moves first and has a slightly higher win rate.


As Black's pieces move towards the White Kingside their advantage increases. You can see that it's actually the Black Knight which has a higher advantage than the Bishop on the White Kingside. This is probably because a Black Knight is a more dangerous attacker there than the Black Bishop.

As expected, pieces in the Black corners (a8 and h8) are the worst. The Black Bishop seems a bit stronger than the Knight on the Black Kingside and Queenside. But the Knight is stronger on the White Queenside. Interestingly, the Bishop is at its strongest in the middle/right center.

Overall we can't say for sure which piece is better based on these heatmaps because we have to weigh in the frequency with which each square can be accessed. Eyeballing it seems to show that maybe the Bishop has the upper hand but we need a more rigorous approach.
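One way to picture that weighing is a frequency-weighted average over squares. The sketch below uses invented numbers throughout (neither the advantages nor the frequencies come from the study); it only shows the arithmetic a more rigorous comparison would need.

```python
# Hypothetical numbers for illustration only; they are NOT taken from the study.
# Each entry maps a square to (predicted centipawn advantage, share of time spent there).
bishop_squares = {"c4": (35.0, 0.30), "g2": (40.0, 0.50), "a2": (10.0, 0.20)}
knight_squares = {"f3": (30.0, 0.60), "d5": (55.0, 0.10), "a2": (20.0, 0.30)}

def weighted_advantage(squares):
    """Average the per-square advantage, weighting each square by its frequency."""
    total_weight = sum(freq for _, freq in squares.values())
    return sum(adv * freq for adv, freq in squares.values()) / total_weight

print(weighted_advantage(bishop_squares))
print(weighted_advantage(knight_squares))
```

In this made-up example the knight has the single best square (d5), but it rarely gets there, so the bishop still comes out ahead on average.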

How to find the piece values

Now we shall discuss ways in which single piece values have been deduced. You'll be interested to know how piece values can actually be calculated. We want to know their 'worth'. But how do we define 'worth'? Well, in the previously described study they did it through machine learning, by seeing the centipawn gain when a knight/bishop is located on a certain square. But how do we find a single value for each piece across games?

The way forward is to define worth as the result of a game. So maybe we can see whether having a certain piece leads to more wins. Ok..., but how exactly do we do this? What's the method? It ain't that easy to come up with an answer.

The way forward is to find values for pieces in a formula, where the variables are the piece values, that leads to the most accurate prediction of a game result. But what formula?

Here is one:

Game outcome = a*(pawn difference) + b*(knight difference) + c*(bishop difference) + d*(rook difference) + e*(queen difference) + intercept

e.g. pawn difference = White pawns - Black pawns.

Note: The '+ intercept' at the end makes sure the function aligns to the y axis. It plays the same role as the c in y = mx + c, which shifts the function to match the y values. It is written out in full here because the letter c is already used for the bishop coefficient.

So we need to know the values of the coefficients a, b, c, d and e. These are the impacts that a change in the number of that piece has. So a greater coefficient means that an increase in the difference of that piece between opponents has a bigger impact on the winning chance of white.

Those coefficients are the piece values themselves, because they show how much each piece contributes to affecting the winning chance. But how do you find the coefficients a,b,c,d,e (aka pawn value, knight value, bishop value, rook value, queen value)?
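Before fitting anything, it can help to see the formula evaluated once. This sketch plugs in piece values as the coefficients and scores a hypothetical position; the function name, the dictionary layout and the position are assumptions made for illustration.

```python
# Traditional values used as the coefficients a, b, c, d, e (any other set can be swapped in).
TRADITIONAL = {"pawn": 1, "knight": 3, "bishop": 3, "rook": 5, "queen": 9}
ALPHAZERO = {"pawn": 1, "knight": 3.05, "bishop": 3.33, "rook": 5.63, "queen": 9.5}

def material_score(white, black, values=TRADITIONAL, intercept=0.0):
    """Sum of value * (White count - Black count) over the piece types, plus the intercept."""
    return sum(
        values[piece] * (white.get(piece, 0) - black.get(piece, 0))
        for piece in values
    ) + intercept

# Hypothetical position: White has a bishop where Black has a knight, and is a pawn down.
white = {"pawn": 7, "knight": 1, "bishop": 2, "rook": 2, "queen": 1}
black = {"pawn": 8, "knight": 2, "bishop": 1, "rook": 2, "queen": 1}

print(material_score(white, black))             # traditional values
print(material_score(white, black, ALPHAZERO))  # AlphaZero values from this post
```

With the traditional values the bishop-for-knight trade cancels out and only the missing pawn counts; with a higher bishop value, part of that pawn is won back.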

There are two ways of finding the piece values: Neural Nets and Logistic Regression.

Neural Nets (AlphaZero)

In 2020, the DeepMind crew used AlphaZero's neural net to find piece values. The piece values were the coefficients. The predicted outcome was a function of two vectors multiplied together: the piece differences (plus a bias) and the weights (piece values). A bias is just a number added on to make the formula work as described before, like the intercept in y = mx+c.

That's just: Predicted game outcome = a*(pawn difference) + b*(knight difference) + c*(bishop difference) + d*(rook difference) + e*(queen difference) + bias

The predicted outcome was also passed through a hyperbolic tangent function, tanh(x) = sinh(x)/cosh(x) = (e^x − e^(−x)) / (e^x + e^(−x)). This squashes the values into the range -1 to 1, to align with the actual game outcomes (1 = win, 0 = draw, -1 = loss).
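A quick way to convince yourself of the squashing is to compute tanh straight from its definition. The function name below is made up; the check against Python's built-in `math.tanh` is only there as a sanity test.

```python
import math

def tanh_from_definition(x):
    """tanh(x) = (e^x - e^(-x)) / (e^x + e^(-x))."""
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))

for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    # Large negative inputs approach -1, large positive inputs approach +1.
    print(x, round(tanh_from_definition(x), 4), round(math.tanh(x), 4))
```

However large the material imbalance gets, the output never leaves the interval between -1 (loss) and 1 (win).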

A basic neural net. Wikipedia


The loss function is the squared difference between the actual game outcome (win, draw, loss represented by 1, 0, -1) and the predicted outcome. The best piece values for predicting the outcome were found by minimizing the loss function, which is done by adjusting the weights (piece values). Minimizing the loss function means finding a point where its gradient is zero (a slope of 0 indicates a local minimum, where the loss is at its lowest in that neighbourhood). This is done through gradient descent. The error is sent back through previous layers so that the weights and biases can be adjusted (backpropagation). Through reinforcement (AlphaZero playing 10,000 games against itself with 1 second per move), the piece values become more accurate.
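Here is a minimal sketch of that loop: a tanh prediction, a squared-error loss, and plain gradient descent on the weights. It is not DeepMind's setup. The data is synthetic and noise-free, there are only two features (knight and bishop difference), and the "true" weights are invented so the example has something to recover.

```python
import math

# Synthetic training data: (knight difference, bishop difference) -> outcome.
TRUE_WEIGHTS = [0.3, 0.9]  # invented for this example
positions = [(kd, bd) for kd in (-2, -1, 0, 1, 2) for bd in (-1, 0, 1)]
outcomes = [math.tanh(TRUE_WEIGHTS[0] * kd + TRUE_WEIGHTS[1] * bd) for kd, bd in positions]

def predict(weights, bias, x):
    """Predicted outcome: tanh of the weighted piece differences plus the bias."""
    return math.tanh(sum(w * xi for w, xi in zip(weights, x)) + bias)

def mean_squared_loss(weights, bias):
    errors = [(predict(weights, bias, x) - y) ** 2 for x, y in zip(positions, outcomes)]
    return sum(errors) / len(errors)

weights, bias = [0.0, 0.0], 0.0
learning_rate = 0.1
initial_loss = mean_squared_loss(weights, bias)

for _ in range(2000):
    grad_w, grad_b = [0.0, 0.0], 0.0
    for x, y in zip(positions, outcomes):
        p = predict(weights, bias, x)
        # Chain rule: d(loss)/dz = 2 * (p - y) * (1 - p^2), since tanh'(z) = 1 - tanh(z)^2.
        dz = 2 * (p - y) * (1 - p * p) / len(positions)
        grad_w = [g + dz * xi for g, xi in zip(grad_w, x)]
        grad_b += dz
    # Step downhill: move each parameter against its gradient.
    weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
    bias -= learning_rate * grad_b

final_loss = mean_squared_loss(weights, bias)
print(weights, bias)
print(initial_loss, final_loss)
```

The learned weights end up close to the invented ones, and their ratio (bishop weight divided by knight weight) is what would become the relative piece value.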

Here's what they got:

| AlphaZero | Pawn | Knight | Bishop | Rook | Queen |
|---|---|---|---|---|---|
| Value | 1 | 3.05 | 3.33 | 5.63 | 9.5 |

They noted that this may not represent human play since the values are derived from AlphaZero Games which are stronger and longer.

Logistic Regression (@ubdip)

So back to formulas. We can also use a logistic regression formula. But what is regression?

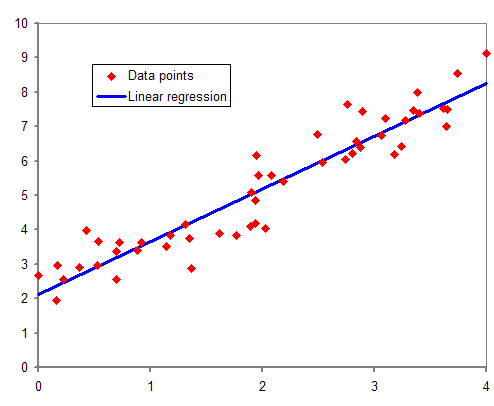

Regression is a way of predicting the dependent variable(s) from the independent variable(s). By convention, the independent variable goes on the x axis.

The function gives the best prediction by minimizing the difference between the line and the points through the method of the least squares. Wikipedia


That is the line of best fit, found by making the difference between the line and the points as low as possible. The line is in the form of y=mx+c, m is the slope and c is the intercept. A change in x causes a change in y. When the slope is greater, the same change in x will produce a greater change in y.
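For a single independent variable, the least-squares line has a closed-form solution. The sketch below fits y = mx + c to five made-up points; the function name and the data are illustrative only.

```python
def fit_line(xs, ys):
    """Least-squares line y = m*x + c through the points (closed-form solution)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: how x and y vary together, divided by how much x varies on its own.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    m = sxy / sxx
    # Intercept: chosen so the line passes through the point of averages.
    c = mean_y - m * mean_x
    return m, c

xs = [1, 2, 3, 4, 5]
ys = [2.9, 5.2, 6.9, 9.1, 10.9]  # made-up points scattered around y = 2x + 1
m, c = fit_line(xs, ys)
print(m, c)
```

The fitted slope and intercept land near 2 and 1, the line the points were scattered around.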

This is an example with one independent variable. We can also use multiple independent variables. Just like in the formula shown before:

Game outcome = a*(pawn difference) + b*(knight difference) + c*(bishop difference) + d*(rook difference) + e*(queen difference) + intercept

e.g. pawn difference = White pawns - Black pawns.

So we just do the regression shown earlier: we find the values that make the formula predict whether White wins most effectively.

But wait a minute! The dependent variable (whether White wins) is binary. It's either win or not win. It's not continuous. So you won't be able to get a smooth continuous function. So what do we do?

The answer is to use a logistic curve. Here's what it looks like:

This image is just a general view of the function, not specific to what will model the chess game outcomes. Wikipedia


As you can see, it basically imposes an S-shaped function between 0 and 1. This allows a continuous function to be created for binary outcomes. This 'S' shape also represents how a game outcome can change as the piece difference changes from one side to the other.

The logistic curve is y=1/(1+e^-x). The 'y' is the predicted probability of a win, somewhere between 0 (loss) and 1 (win). The 'x' is built from the independent variables. Our independent variables are the differences in pieces. That can be modeled by a*(pawn difference) + b*(knight difference) + c*(bishop difference) + d*(rook difference) + e*(queen difference). So in the end we have

y=1/(1+e^-(a*(pawn difference) + b*(knight difference) + c*(bishop difference) + d*(rook difference) + e*(queen difference)))

So we need to find coefficients (piece values) that will predict the game outcomes most accurately.

The way to do that is by using computer software (because it can calculate the piece values through something called maximum likelihood estimation).
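As a rough sketch of what that software does, the snippet below fits a two-feature logistic model by climbing the log-likelihood with gradient ascent. The win tallies are hypothetical, only the knight and bishop differences are used, and there is no intercept; real analyses use all five piece types and far more data.

```python
import math

def sigmoid(x):
    """The logistic curve y = 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical tallies: (knight difference, bishop difference, White wins, games).
data = [
    (1, 0, 60, 100),
    (-1, 0, 40, 100),
    (0, 1, 65, 100),
    (0, -1, 35, 100),
]
total_games = sum(games for _, _, _, games in data)

knight_coef, bishop_coef = 0.0, 0.0
learning_rate = 1.0

for _ in range(500):
    grad_knight, grad_bishop = 0.0, 0.0
    for kd, bd, wins, games in data:
        p = sigmoid(knight_coef * kd + bishop_coef * bd)
        # Gradient of the log-likelihood: (observed wins - expected wins) * feature.
        residual = (wins - games * p) / total_games
        grad_knight += residual * kd
        grad_bishop += residual * bd
    # Gradient ascent: climb towards the coefficients that make the data most likely.
    knight_coef += learning_rate * grad_knight
    bishop_coef += learning_rate * grad_bishop

print(knight_coef, bishop_coef, bishop_coef / knight_coef)
```

In this toy data an extra bishop wins 65% of the time and an extra knight 60%, so the fitted bishop coefficient comes out larger. Dividing every coefficient by the pawn's would give values on the familiar pawn = 1 scale.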

In 2022, Lichess developer @ubdip published a blog post about how they used 100,000 positions from engine-generated games to find out the values of the pieces. They used logistic regression as described previously. They added a side-to-move offset to the formula to account for which side moves next. They also omitted positions where the previous move changed the material balance, to avoid noise in the data.

Here's what they got:

| @ubdip | Pawn | Knight | Bishop | Rook | Queen |
|---|---|---|---|---|---|
| Value | 1 | 3.16 | 3.28 | 4.93 | 9.82 |

Logistic Regression (Gupta et al.)

There was a study done in 2023 (Gupta et al.). They used a dataset of 553,631 games played here on Lichess in order to determine the values of the pieces. The games were mostly in the 1250-2250 rating range (Lichess rating). They used logistic regression as described above. They made multiple estimates of the piece values: one used the material on the board at the exact midpoint of each game, and another used the material at the end of the game. And they did this for Classical, Blitz, and Bullet.

To find the accuracy of the predictive piece values, they used 80% of the games to find the piece values and used the remaining 20% to test how accurately the piece values predicted the result of a game.
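The 80/20 idea can be sketched in a few lines. Everything here is a stand-in: the games are synthetic, and the "model" is just a single threshold on a material score rather than a full logistic regression. The point is only that the fitting sees the training games and the accuracy is measured on games it has never seen.

```python
import random

def train_test_split(games, train_fraction=0.8, seed=0):
    """Shuffle a copy of the games and cut it into a training and a test part."""
    shuffled = list(games)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

def accuracy(threshold, games):
    """Share of games where 'material score > threshold' matches 'White won'."""
    hits = sum(1 for score, white_won in games if (score > threshold) == white_won)
    return hits / len(games)

# Synthetic games: (material score for White, did White win?), every tenth result flipped.
games = []
for i in range(100):
    score = (i % 11) - 5
    white_won = score > 0
    if i % 10 == 0:
        white_won = not white_won
    games.append((score, white_won))

train, test = train_test_split(games)

# "Training": pick the threshold that does best on the training games only.
best_threshold = max(range(-5, 6), key=lambda t: accuracy(t, train))

print(accuracy(best_threshold, train), accuracy(best_threshold, test))
```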

Here's what they got (normalized for the Knight since normalizing for the pawn gave values that didn't resemble the traditional piece values):

| Gupta et al. | Pawn | Knight | Bishop | Rook | Queen | Accuracy |
|---|---|---|---|---|---|---|
| Final: Combined | 1.43 | 3 | 3.30 | 4.56 | 8.92 | 89% |
| Middle: Combined | 1.36 | 3 | 3.70 | 4.86 | 8.40 | 63% |
| Final: Bullet | 1.70 | 3 | 3.40 | 4.73 | 9.64 | 90% |
| Final: Blitz | 1.41 | 3 | 3.32 | 4.56 | 8.84 | 89% |
| Final: Classical | 1.39 | 3 | 3.26 | 4.55 | 9.05 | 89% |

They concluded that the values were close to the traditional values when using final positions. They also said that logistic regression on positions midway through a game was not an accurate way (only 63%) of determining piece values, because the other half of the game still needs to be played and a piece difference can be compensated for by another type of advantage. Another likely factor is that changes in material balance through captures and promotions weren't mentioned as having been corrected for. (@ubdip corrected for this in their analysis.)

As the authors stated, normalizing to the pawn gave piece values that were quite different from the traditional values:

| Gupta et al. | Pawn | Knight | Bishop | Rook | Queen |
|---|---|---|---|---|---|
| Final: Combined | 1 | 2.09 | 2.30 | 3.18 | 6.22 |

So they normalized using the knight as a baseline, giving similar values to the traditional ones (with the exception of the pawn).
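Switching baselines is just a rescaling, which a short sketch makes clear. Starting from the knight-normalized "Final: Combined" row, dividing everything by the pawn's value gets back to the pawn-normalized row to within rounding (the published values are already rounded, so the last digit can differ). The function name is made up for illustration.

```python
# "Final: Combined" values from the post, normalized so that knight = 3.
knight_normalized = {"pawn": 1.43, "knight": 3.0, "bishop": 3.30, "rook": 4.56, "queen": 8.92}

def renormalize(values, baseline, target=1.0):
    """Rescale all values so that the baseline piece is worth `target`."""
    scale = target / values[baseline]
    return {piece: round(value * scale, 2) for piece, value in values.items()}

pawn_normalized = renormalize(knight_normalized, "pawn")
print(pawn_normalized)

# The ratio between two pieces does not depend on the baseline.
print(knight_normalized["bishop"] / knight_normalized["knight"])
```

Whichever baseline is used, the bishop stays about 10% above the knight; only the numbers attached to each piece move.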

Are the values different because pieces are worth less to beginning players than to GMs/Engines, who are better able to make use of the pieces' potential? (1250-2250 Lichess is roughly 1400-1900 FIDE.) Or is it because of the methodology of the study, which took positions at the end of the game? (The authors said that the pawn may be worth more at the end of the game.) But it was the same story for the middlegame positions, so maybe the values do vary with the player's level?

| Gupta et al. | Pawn | Knight | Bishop | Rook | Queen |
|---|---|---|---|---|---|
| Middle: Combined | 1 | 2.21 | 2.73 | 3.58 | 6.19 |

But then again, this model had a low accuracy of 63%. It's a mystery.

Verdict

The Evidence:

- Heatmaps generally show an advantage for the White Bishop on the White Kingside, and for the Black Bishop on the Black Kingside and Queenside. The Black Knight has the advantage on the White Kingside as well as the White Queenside. They can't give a definitive answer, although since far-advanced pieces are less common, the predominance of a Bishop advantage on the home front seems to weigh in favour of the bishop. But of course this is more for fun than an admissible piece of evidence. I showed this study because seeing the effect of the squares is cool.

- AlphaZero values: 3.33 for Bishop, 3.05 for Knight

- @ubdip values: 3.28 for Bishop, 3.16 for Knight

- Gupta et al. values: 3.30 for Bishop, 3 for Knight (Final: Combined values, normalized to Knight. All other formats also show a Bishop advantage.)

The individual pieces of evidence add up together.

Verdict: The Bishop is Better than the Knight

Of course the question is why? We know some things about the (general) conditions that are the best for each piece.

Bishop: Open Positions, Pawns on Both Sides.

Knight: Closed Positions, Pawns on One Side.

One of the defining features of Bishop vs Knight is the fact that their paths match. This allows a bishop to dominate a knight. A knight could dominate a bishop too, but since the bishop is faster, it has the advantage in the domination game.

This is a plausible explanation, but there are probably more factors. There still needs to be an analysis of the factors that make a bishop better overall. Maybe there are just more open games in general?

@ubdip estimated the bishop pair advantage at 0.46 in the midgame and 0.63 in the endgame. This is probably another factor in the Bishop's superiority, because having a Bishop opens up the possibility of a Bishop pair multiplier combo.

In the end, the definitive answer for why a Bishop is better than the Knight will be a bunch of factors with different weightings. Maybe neural nets can figure this one out.

It is said that as you get wiser, your fear of the knight decreases and your fear of the bishop increases.

Amateurs are known to fear the horse, because the horse can be tricky.

But the results of the 1250-2250 Lichess range show that Bishops are still worth more than Knights.

The laser pointing at f2/f7 and h2/h7 should not be underestimated.

Anyway.

TL;DR Bishop Better.
